Implementing (soft) data contracts for Terraform stacks

Once your Infrastructure as Code journey reaches the point where your Terraform stacks are layered and each service may have its own stack, those stacks have to exchange data. This post explains a simple setup for exchanging structured data between stacks and for establishing a soft contract between upstream and downstream services.

Please check my talk “lessons learned from running terraform at reasonable scale” for an introduction to the concept, as we are starting off from there (read until “(1.3.5) Downsides of strong decoupling”).

TL;DR

Links

- Talk: sigterm-de/terraform-gitops-talk

- TF module sources: sigterm-de/terraform-gitops-talk/modules

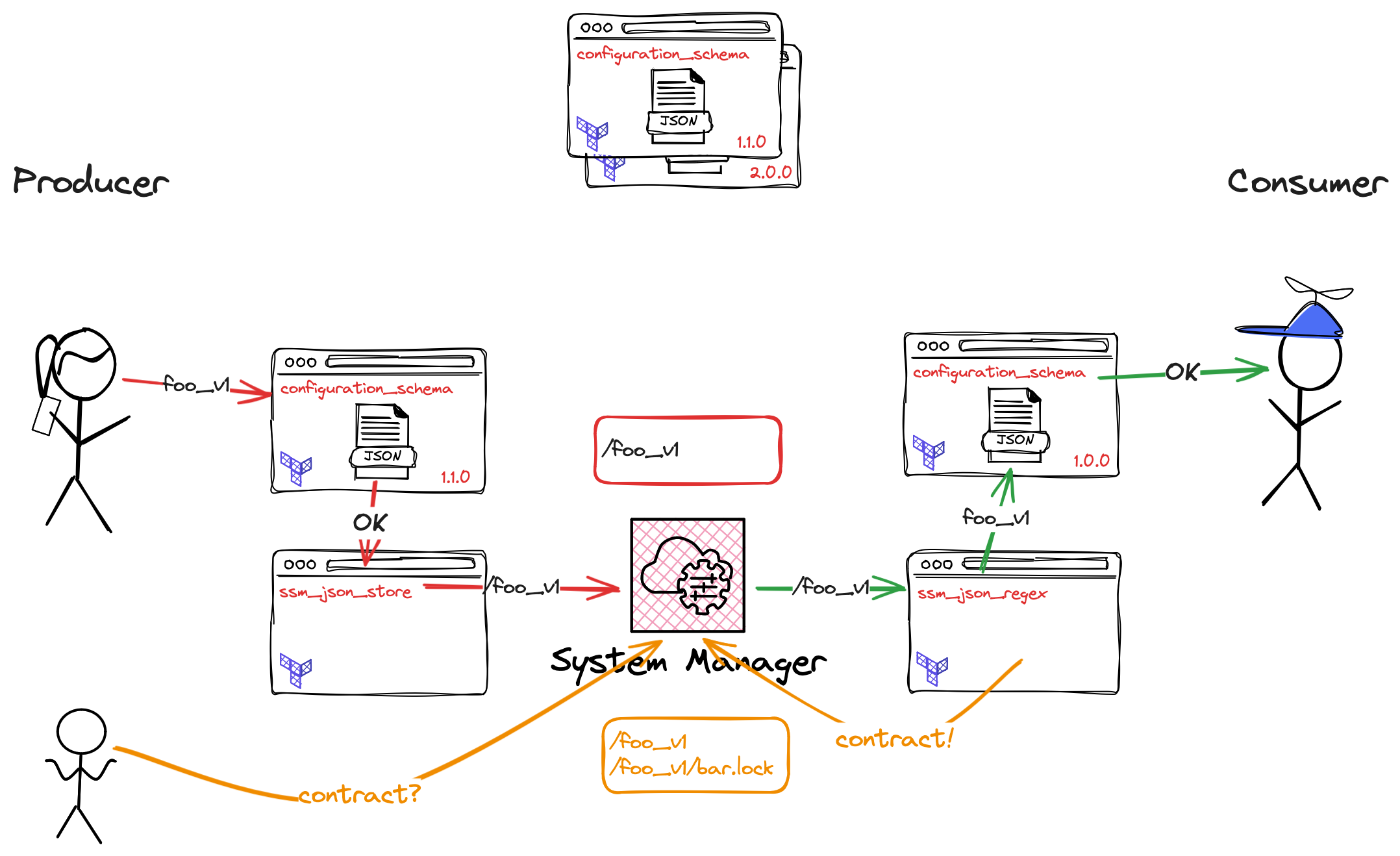

Indirect information exchange - an implicit data contract

Simply put, an upstream service (producer) stores information in a convenient store (e.g. SSM or S3 on AWS) and a downstream service (consumer) reads it to parameterise itself. (You can find example Terraform modules in the talk repository mentioned above; the URL is at the bottom.)

By consuming the structured data, producers and consumers establish a relationship where one depends on the other and changes to the data must be coordinated - a data contract.

Sadly, this contract is neither visible nor enforceable. For APIs we have pacts, but for plain data there is no standard tooling available.
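The exchange described in this section can be sketched with plain AWS provider resources. This is a minimal sketch and not the modules from the talk repository: the parameter name `/configuration/foo_v1` and the sample payload are assumptions for illustration, and the producer and consumer parts would live in two separate stacks.

```hcl
# --- producer stack (hypothetical example values) ---------------------
locals {
  foo_v1 = {
    installed = true
    public    = { sqs = { foo = { name = "foo" } } }
  }
}

# Store the structured data as one JSON document in SSM Parameter Store.
resource "aws_ssm_parameter" "foo_v1" {
  name  = "/configuration/foo_v1"
  type  = "String"
  value = jsonencode(local.foo_v1)
}

# --- consumer stack (separate state) ----------------------------------
# Read the document back and decode it into a Terraform object.
data "aws_ssm_parameter" "foo_v1" {
  name = "/configuration/foo_v1"
}

locals {
  # The provider marks `value` as sensitive, so `upstream` is sensitive too.
  upstream       = jsondecode(data.aws_ssm_parameter.foo_v1.value)
  foo_queue_name = local.upstream.public.sqs.foo.name
}
```

Nothing in this sketch tells the producer that `foo_queue_name` depends on its data, which is exactly the gap the rest of the post addresses.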

Pre-requisites - data quality

Another annoyance is the missing data quality gate. Each producer can publish data in its own format and each consumer has to check for the correct fields.

By defining a common format in JSON Schema, producers can add a check at write time and consumers can trust the data received (even implement a verification if need be).

How’s that, you ask? Look no further: modules and examples are provided.

JSON Schema

The (partial) schema below describes the example configuration data, which is given in HCL in the next section.

    {
      "$id": "https://raw.githubusercontent.com/sigterm-de/terraform-gitops-talk/main/modules/configuration_schema/schema.json",
      "$schema": "https://json-schema.org/draft/2020-12/schema",
      "title": "Configuration data object",
      "type": "object",
      "properties": {
        "installed": {
          "type": "boolean"
        },
        "private": {
          "type": "object",
          "properties": {
            "database": {
              "type": "object",
              "additionalProperties": {
                "$ref": "#/$defs/database"
              }
            }
          }
        },
        "public": {
          "type": "object",
          "properties": {
            "sns": {
              "type": "object",
              "additionalProperties": {
                "$ref": "#/$defs/name_arn"
              }
            },
            "sqs": {
              "type": "object",
              "additionalProperties": {
                "$ref": "#/$defs/name_arn_url"
              }
            }
          }
        }
      },
      "required": [
        "installed",
        "private",
        "public"
      ],
      "$comment": "more data definitions are omitted, see full source below 👇"
    }

Full source: https://raw.githubusercontent.com/sigterm-de/terraform-gitops-talk/main/modules/configuration_schema/schema.json

HCL data

    locals {
      service = "foo"

      foo_v1 = {
        installed = true
        private = {
          database = {
            foo = {
              name            = "module.database.database_name"
              username        = "module.database.database_username"
              secret_key      = "module.database.secret_key"
              endpoint        = "module.database.endpoint"
              reader_endpoint = "module.database.reader_endpoint"
              port            = 5432
            }
          }
        }
        public = {
          sqs = {
            foo = {
              name = "foo"
              url  = "http://example.com/foo"
              arn  = ""
            }
          }
          sns = {}
        }
      }
    }

Usage

    # (1) load the schema version and validate the input data
    module "validated_data" {
      source  = "registry.example.com/foo/configuration_schema/any"
      version = "1.0.0"

      data = local.foo_v1
    }

    # (2) write the validated data into the data store
    module "upstream_ssm" {
      source = "registry.example.com/foo/ssm_json_store/aws"

      name = local.service
      path = "/configuration"
      data = module.validated_data.validated
    }

Voilà: a versioned schema, validated data, happy consumers ;-)
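To give an idea of what can sit behind `module.validated_data`: such a module needs little more than an input variable, a check, and an output that passes the data through. The sketch below is a simplified stand-in and not the `configuration_schema` module from the repository. It uses Terraform's built-in variable validation instead of JSON Schema and only checks a small part of the structure. The variable name `data` and the output name `validated` mirror the usage above; everything else is an assumption.

```hcl
# Hypothetical stand-in for a validation module (not JSON Schema based).
variable "data" {
  description = "Configuration data object to check before publishing."

  # The three top-level keys are required; their content stays flexible.
  type = object({
    installed = bool
    private   = any
    public    = any
  })

  validation {
    # Every SQS entry must carry name, arn and url.
    condition = alltrue([
      for key, queue in try(var.data.public.sqs, {}) :
      can(queue.name) && can(queue.arn) && can(queue.url)
    ])
    error_message = "Each entry in public.sqs needs the attributes name, arn and url."
  }

  validation {
    # Every SNS entry must carry name and arn.
    condition = alltrue([
      for key, topic in try(var.data.public.sns, {}) :
      can(topic.name) && can(topic.arn)
    ])
    error_message = "Each entry in public.sns needs the attributes name and arn."
  }
}

# Hand the data back unchanged once all validations have passed.
output "validated" {
  value = var.data
}
```

Because the producer writes `module.validated_data.validated` and not the raw local, invalid data fails at plan time and never reaches the store.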

The soft contract

As each consumer uses a Terraform module to load producer data into its realm, we can make use of this mechanism to leave a <service>.lock at the source data.

Each producer can then check (manually or automatically) which consumers depend on it.

Action

    # (1) load necessary data from upstream/producers
    module "ssm_data" {
      source  = "registry.example.com/foo/ssm_json_regex/aws"
      version = "0.1.0"

      path                 = "/configuration"
      include_filter_regex = "(base|foo_v1)"

      # Soft data contract
      # leave a `.lock` at the read parameters and document consumption of the data
      lock = local.service
    }

    # --- inside the `ssm_json_regex` module ------------------------------
    # (2) also leave a `.lock` entry at read
    resource "aws_ssm_parameter" "lock" {
      for_each = var.lock != null ? local.filtered_paths : toset([])

      name      = "${each.key}/${var.lock}.lock"
      type      = "String"
      value     = "true"
      overwrite = true

      tags = { "Provisioner" = "Terraform" }
    }

Result

    /configuration/base
    /configuration/base/consumer.lock
    /configuration/foo_v1
    /configuration/foo_v1/consumer.lock
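On the producer side, the automated check could look roughly like this. It is a sketch that assumes the parameter layout shown in the listing above and uses the AWS provider's `aws_ssm_parameters_by_path` data source; the local and output names are made up for illustration.

```hcl
# Producer side: list everything stored below the published parameter.
data "aws_ssm_parameters_by_path" "foo_v1" {
  path      = "/configuration/foo_v1"
  recursive = true
}

locals {
  # Keep only the `<service>.lock` entries and strip path and suffix,
  # e.g. "/configuration/foo_v1/consumer.lock" becomes "consumer".
  foo_v1_consumers = [
    for name in data.aws_ssm_parameters_by_path.foo_v1.names :
    trimsuffix(basename(name), ".lock")
    if can(regex("\\.lock$", name))
  ]
}

output "foo_v1_consumers" {
  description = "Services that currently hold a lock on foo_v1."
  value       = local.foo_v1_consumers
}
```

The same list can of course be read manually, for example by browsing the parameter path in the AWS console.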

API versioning

Changes in the Configuration data object must be versioned. Following good semantic versioning practice and keeping the schema compatible within a major version makes it easy to use version identifiers.

In the example above I already versioned the producer's data: foo_v1. (The common notation foo/v1 is not usable in our case, as the consuming modules use / as a path divider to provide the correct key in a map.)

The technical schema is versioned through the Terraform module configuration_schema and a version constraint such as version = "~> 1.0.0".
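For reference, the `~>` operator lets only the rightmost given version component increase, so the two constraints below differ in how much they allow. The module source is the placeholder from the usage example; the second module name and the sample data are assumptions for illustration. Which constraint fits depends on how strictly the schema module follows semantic versioning.

```hcl
locals {
  # Hypothetical minimal data object, just to make the calls complete.
  foo_v1 = {
    installed = true
    private   = {}
    public    = {}
  }
}

# "~> 1.0.0" accepts patch releases only: >= 1.0.0 and < 1.1.0.
module "validated_data" {
  source  = "registry.example.com/foo/configuration_schema/any"
  version = "~> 1.0.0"

  data = local.foo_v1
}

# "~> 1.0" accepts every 1.x release: >= 1.0 and < 2.0.
# This matches "compatible within a major version".
module "validated_data_any_minor" {
  source  = "registry.example.com/foo/configuration_schema/any"
  version = "~> 1.0"

  data = local.foo_v1
}
```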

Knowing its consumers, a producer can publish v2.0.0 data in e.g. foo_v2 and, once all locks on foo_v1 are released, drop the deprecated data from Terraform.

In short

- (p) publish foo_v1

- (c) consume foo_v1 and leave a lock foo_v1/consumer.lock

- data schema v2 is published

- (p) publish additional foo_v2 data and deprecate foo_v1

- (p) approach all .lock holders

- (c) switch to foo_v2, release lock on foo_v1 and lock on foo_v2 (handled by TF code)

- (p) as no .lock is held on foo_v1, drop the data
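The last step of this list can be backed by a soft guard on the producer side. This is a sketch, assuming Terraform 1.5 or later (for `check` blocks) and the `/configuration/foo_v1` parameter layout used earlier; the check name is made up. A failed check only produces a warning and does not block the apply, which matches the soft nature of the contract.

```hcl
# Warn as long as any consumer still holds a lock on foo_v1.
check "foo_v1_has_no_locks" {
  # Data source scoped to this check: list everything below foo_v1.
  data "aws_ssm_parameters_by_path" "foo_v1" {
    path      = "/configuration/foo_v1"
    recursive = true
  }

  assert {
    # Count the parameters whose name ends in ".lock".
    condition = length([
      for name in data.aws_ssm_parameters_by_path.foo_v1.names :
      name if can(regex("\\.lock$", name))
    ]) == 0
    error_message = "foo_v1 is still locked by at least one consumer. Do not drop it yet."
  }
}
```

Once the warning disappears from the plan output, the foo_v1 data can be removed from the producer stack.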

Summary

Applying the described pattern gives teams confidence in the data they produce and consume, with very little operational overhead.

Why don’t you try it now?

- Talk: sigterm-de/terraform-gitops-talk

- TF module sources: sigterm-de/terraform-gitops-talk/modules